If you’re making a game that uses a fixed time step with interpolation (like the one described in “Fix Your Timestep”), you may have noticed something slightly off with the frame timing. I’m not talking about slow frame rates or huge spikes; there’s nothing that can be done about those except optimizing. I’m not talking about what interpolation fixes either. This is a very subtle issue: even though you know your game should run at a solid vsynced 60 fps (or whatever your refresh rate is), it still feels slightly wrong. The reason may be that your measured delta time is never exactly equal to the vsync interval. The context queue used by DirectX can make matters even worse, causing a heartbeat-like stutter of a long delta followed by a short delta. I’ve worked out a method to eliminate the stutter caused by these kinds of fluctuations. But first, let’s talk about why the measured time must be corrected and the downsides of some of the most common approaches.

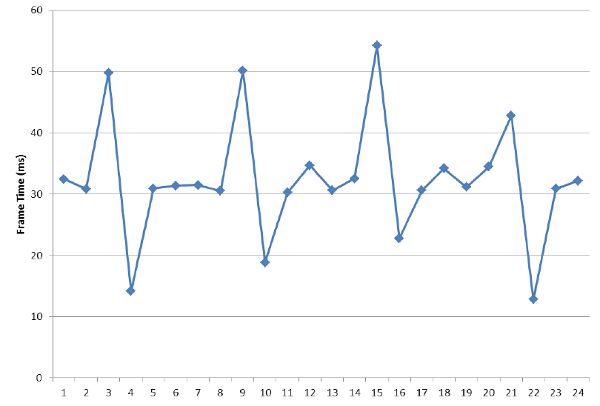

Example of frame time variance provided by AMD

If you apply a delta time based on the timing of the previous frame, that delta will vary from the monitor’s refresh interval. This is the most common and simplest way to apply delta time. The deltas average out to the refresh interval, but the individual samples can land anywhere between frames. It’s as if, instead of shooting a movie at a fixed frame rate, you captured each frame somewhere between where that frame and the next one should be. When you watch the movie it still plays at normal speed, but each frame is shown at a slightly different time from when it was captured, causing a bit of stutter.
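To make that variance concrete, here is a minimal C++ sketch. It assumes a 60 Hz display and a made-up set of offsets (in seconds) at which each update starts after its vsync; `MeasuredDeltas` is a hypothetical helper, not part of any engine, that computes the delta you would measure from the previous update’s start time.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical illustration: the display refreshes every `interval` seconds,
// but update i starts `startOffsets[i]` seconds after its vsync. The delta an
// engine measures is the gap between consecutive update start times.
std::vector<double> MeasuredDeltas(double interval,
                                   const std::vector<double>& startOffsets)
{
    assert(interval > 0.0);
    std::vector<double> deltas;
    for (std::size_t i = 1; i < startOffsets.size(); ++i)
    {
        const double previousStart = (i - 1) * interval + startOffsets[i - 1];
        const double currentStart = i * interval + startOffsets[i];
        deltas.push_back(currentStart - previousStart);
    }
    return deltas;
}
```

With offsets of 2, 10, 4, 12 and 3 ms, the measured deltas swing between roughly 7.7 ms and 24.7 ms, yet their sum differs from four intervals only by the difference between the first and last offsets (1 ms). That is why the average looks right even though no single delta matches the refresh interval.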

Another option is to just use the vsync interval as the delta. This would work fine if you could always keep up with the refresh rate; the problem happens when you fall far enough behind to start dropping frames. If you add only one vsync interval even though two or more vsyncs have passed, it causes a minor hiccup. Dropping a frame is bad, but more importantly the game also just ran at half speed for one frame, which makes the pop infinitely more noticeable. Things get worse when the fps is consistently low, and we want the game to still run at normal speed even on slower computers. The method outlined below fixes that by detecting how many vsyncs passed and rounding the delta up to that multiple of the vsync interval.
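The half-speed problem comes down to simple arithmetic. This sketch assumes we already know how many vsyncs each frame took (`vsyncsPerFrame`, a hypothetical input; a real engine would have to infer it from measured time) and compares adding one interval per frame against adding the matching multiple of the interval. All function names are illustrative.

```cpp
#include <cassert>
#include <vector>

// Game time when every frame adds exactly one vsync interval, regardless of
// how many vsyncs actually passed while producing that frame.
double NaiveGameTime(double interval, const std::vector<int>& vsyncsPerFrame)
{
    assert(interval > 0.0);
    return static_cast<double>(vsyncsPerFrame.size()) * interval;
}

// Game time when each frame adds the number of vsyncs that actually passed
// (at least one) times the vsync interval.
double VsyncMultipleGameTime(double interval, const std::vector<int>& vsyncsPerFrame)
{
    assert(interval > 0.0);
    double gameTime = 0.0;
    for (int vsyncs : vsyncsPerFrame)
        gameTime += (vsyncs > 0 ? vsyncs : 1) * interval;
    return gameTime;
}

// Time that really passed on the display.
double WallTime(double interval, const std::vector<int>& vsyncsPerFrame)
{
    double wallTime = 0.0;
    for (int vsyncs : vsyncsPerFrame)
        wallTime += vsyncs * interval;
    return wallTime;
}
```

If every frame takes two vsyncs, `NaiveGameTime` ends up at half of `WallTime`, so the game runs at half speed, while the multiple-of-interval version keeps pace with the display.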

Disabling vsync doesn’t solve the problem either and can actually make it worse by introducing screen tearing. If you can run non-vsynced at a crazy high fps it will look slightly better, because most of the renders are thrown away, so the one that is actually shown ends up closer to the correct time. But the monitor is still refreshing at a fixed rate, so the timing still isn’t exactly correct. This also isn’t a very practical solution, because a graphically complex game cannot sustain that high a frame rate. Bottom line: it wastes cycles rendering more frames than you need.

Use the delta that will be, not the delta that was!

Fortunately there is a simple solution to all of these problems: run vsynced and adjust the delta in advance, correcting for how much time will have passed when the frame actually gets displayed. We can calculate how many frames will have passed by the next vsync and round the delta up to the next multiple of the vsync interval. Because vsync is on, we know that by the time the frame is displayed a whole number of frames will have passed, so the delta is recalculated to match what it will be then. When the calculated frame count is 0 or less we still need to bump it up to a full frame. This is necessary for triple buffering to work: multiple updates can happen during the same vsync cycle to keep the context queue full, so the delta must stay one or more frames ahead. It seems like without some form of frame delta correction there is no way triple buffering could work properly! The real trick is that when we adjust the delta like this, we must also save the extra bit of time so it can be subtracted from the next delta. This code may look similar to how the fixed time step pump works, but don’t be confused: it must be kept completely separate and happen before (and in addition to) the fixed time step loop!

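```cpp
// this buffer keeps track of the extra bits of time
static float deltaBuffer = 0;

// add in whatever time we currently have saved in the buffer
delta += deltaBuffer;

// calculate how many frames will have passed on the next vsync
const int refreshRate = GetMonitorRefreshRate();
int frameCount = (int)(delta * refreshRate + 1);

// if less than a full frame, increase delta to cover the extra
if (frameCount <= 0)
    frameCount = 1;

// save off the delta, we will need it later to update the buffer
const float oldDelta = delta;

// recalculate delta to be an exact multiple of the vsync interval
delta = frameCount / (float)refreshRate;

// update delta buffer so we keep the same time on average
deltaBuffer = oldDelta - delta;
```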
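For experimenting outside an engine, here is a self-contained sketch of the smoothing step described above: compute how many vsync intervals will have passed, force at least one, and carry the remainder in a buffer. It assumes an integer refresh rate passed in by the caller, uses `double` instead of `float`, and keeps the buffer in a hypothetical `DeltaSmoother` struct rather than a function-local `static`; these are choices made for testability, not part of the author’s engine.

```cpp
#include <cassert>
#include <vector>

// Hypothetical wrapper around the delta smoothing step.
struct DeltaSmoother
{
    int refreshRate;          // assumed whole-number refresh rate in Hz
    double deltaBuffer = 0.0; // extra bits of time carried between frames

    explicit DeltaSmoother(int rate) : refreshRate(rate)
    {
        assert(rate > 0);
    }

    // Takes a measured delta in seconds and returns a delta that is an
    // exact multiple of the vsync interval (1 / refreshRate).
    double Smooth(double delta)
    {
        // add in whatever time is currently saved in the buffer
        delta += deltaBuffer;

        // how many frames will have passed on the next vsync
        int frameCount = static_cast<int>(delta * refreshRate + 1);

        // less than a full frame: still advance by one whole frame
        if (frameCount <= 0)
            frameCount = 1;

        const double oldDelta = delta;
        delta = frameCount / static_cast<double>(refreshRate);

        // keep the difference so total time is preserved on average
        deltaBuffer = oldDelta - delta;
        return delta;
    }
};
```

Fed a first-frame delta of 0, deltas jittering by a few milliseconds around 1/60 s, and one late frame of 2/60 s plus 2 ms, it returns exactly one interval per frame and two intervals for the late frame, while `deltaBuffer` always holds the difference between the total measured time and the total time handed to the game.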

This simple method fixes the issue by calculating what the delta will be at the end of the frame. On a modern operating system like Windows, the time between the vsync and the start of the next update can vary quite a bit, which is what causes the variance. If we had a system where the update always started at exactly the same time after the vsync, there wouldn’t be an issue, but even on a game console there will be a slight variance. Also, I am simplifying things a bit by saying that we are predicting the time of the vsync; in reality there is no way of knowing exactly when the vsync will happen (what its phase offset is), but that doesn’t matter as long as the phase offset is constant.

I’m sure this will be confusing to some people, because the fixed time step and interpolation stuff is already a major hurdle to get over, and this adds yet another layer of abstraction to how time is treated within the engine. However, I think that some method of time delta smoothing is essential for any game running vsynced, even if it doesn’t use a fixed time step or interpolation. My game engine is a solid example of how smooth this system can look. The difference between this and a non-vsynced version is tremendous, even though it runs at about 150 fps non-vsynced on my PC. Try it yourself by toggling vsync: press ~ to bring up the debug console and type “vsync 0”.

- Frank Engine Test Game – Requires Windows and DirectX

Basically, with vsync + fixed step, at some intervals the accumulated delta remainders will make your engine run 2 time steps for a single render, which means that from one frame to the next everything moves twice, double the usual distance for a single frame. Even though it won’t be displayed exactly that way because of the interpolation value, it still doesn’t fix the stutter (the single-frame “jump”).

Did I get that right? At least that’s how I understand the stuttering in my engine.

I’m trying a fixed step loop with your delta smoothing, and although I can definitely see an improvement (much less stutter), it’s still there =(

Instead of feeding the fixed loop the timer delta, I feed it the smoothed delta from your method, if I understood correctly.

Here’s my loop: link

(check SmoothedFixedStepLoop())

I’m using the refresh rate from the DX swap chain adapter, taken from the display mode (refresh.numerator / refresh.denominator), which is 59.939-something. I don’t get why it’s not a round number, but it isn’t >.>

And now I have to say: wow, great work, man. You are basically where I want to be: a single dude making great games with his own engine. Congrats.

I’m not sure what you mean by the two-time-step jump. A gap that large can occur, but any kind of fluctuation looks like stutter. It comes from the measured time delta differing greatly from what it will be when the image is actually seen. When you are using vsync, the delta you apply should always be a multiple of the vsync interval, which is the inverse of the refresh rate.
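On the double-step question, a minimal sketch may help. It assumes the fixed time step equals the vsync interval (1/60 s here) and uses a standard accumulator loop that only counts how many fixed updates run for each rendered frame; `FixedStepsPerFrame` is a hypothetical helper, not code from either engine.

```cpp
#include <cassert>
#include <vector>

// Counts how many fixed updates a classic accumulator loop runs for each
// rendered frame, given the per-frame deltas it is fed.
std::vector<int> FixedStepsPerFrame(double fixedStep,
                                    const std::vector<double>& frameDeltas)
{
    assert(fixedStep > 0.0);
    std::vector<int> steps;
    double accumulator = 0.0;
    for (double delta : frameDeltas)
    {
        accumulator += delta;
        int count = 0;
        while (accumulator >= fixedStep)
        {
            accumulator -= fixedStep; // one fixed update would run here
            ++count;
        }
        steps.push_back(count);
    }
    return steps;
}
```

Fed exact vsync intervals, the loop runs one update per frame. Fed jittery raw deltas of 1/60 s minus 4 ms, plus 8 ms and minus 6 ms, it runs 0, 2 and 0 updates, which is the kind of double step described in the question. If the fixed step is not the vsync interval (for example 1/100 s on a 60 Hz display), the number of updates per frame will still vary even with smoothing, and interpolation has to cover the difference.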

Your code looks like it should work, but stutter can be caused by many other things, such as performance issues. Getting the time step correct is still an important part of it.

Also, I think that your refresh rate is actually 60. It is probably reported wrong because at some point it was calculated from the inverse of your vsync interval stored as a float, something like .016666, which is not a perfectly accurate representation of 1/60. You might want to try rounding to the nearest whole refresh rate, then taking the inverse of that.
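A sketch of that rounding suggestion, assuming the reported rate (for example numerator divided by denominator from the display mode) arrives as a `double` and that the display’s true rate is a whole number of Hz. `RoundedRefreshRate` and `VsyncInterval` are hypothetical names. Some display modes really are fractional, such as 60000/1001 Hz (about 59.94 Hz); rounding those to 60 makes every smoothed delta slightly too short, so the buffer would slowly accumulate time and occasionally produce a two-interval delta. Treat the rounding as a judgment call rather than a universal fix.

```cpp
#include <cassert>
#include <cmath>

// Rounds a reported refresh rate in Hz to the nearest whole number.
int RoundedRefreshRate(double reportedHz)
{
    assert(reportedHz > 0.0);
    return static_cast<int>(std::lround(reportedHz));
}

// Vsync interval in seconds derived from the rounded refresh rate.
double VsyncInterval(double reportedHz)
{
    return 1.0 / RoundedRefreshRate(reportedHz);
}
```

For example, a reported 59.939 Hz rounds to 60 and yields an interval of exactly `1.0 / 60`.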